As artificial intelligence becomes more embedded in daily life, its role is evolving beyond convenience and productivity. Increasingly, consumers are exploring AI as a source of emotional support and seeking out tools that offer a listening ear, a neutral perspective, or simply a space to vent. But while interest is growing, so too are questions about trust, empathy, and the limits of machine companionship.

Connecting with the AI Consumer, a report from the Profiles team at Kantar, shares new insights into how global consumers are using AI-based tools. The research draws on opinions from more than 10,000 consumers in ten countries and highlights how consumers are using AI today and what they’re looking for next.

A New Frontier for AI

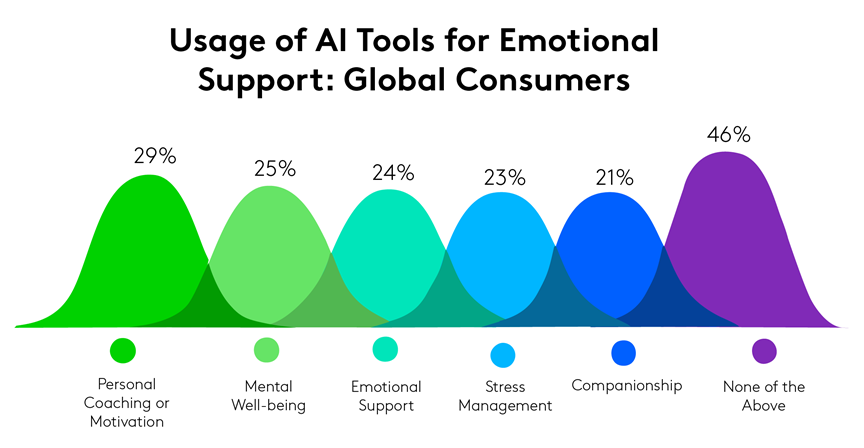

Although emotional and mental support are not yet the most common use cases for AI, the landscape is shifting. In fact, according to Kantar Profiles’ global study, 54% of global consumers report having used AI for at least one of the emotional or mental well-being purposes measured.

Among the emotional applications measured, personal coaching or motivation (29%) tops the list, followed closely by mental well-being support (25%)—a sign that consumers are looking to AI not just for answers, but for encouragement and emotional guidance.

This kind of engagement is especially prevalent among younger generations: 35% of Gen Z and 30% of Millennials say they’ve used AI for emotional support, compared to just 14% of Gen X and 7% of Boomers. As AI tools become more conversational and adaptive, younger users appear more open to turning to them in moments of stress, reflection, or personal growth.

When asked how comfortable they would be discussing personal or emotional details with an AI tool, 41% of global consumers say they would feel somewhat or very comfortable. This signals a growing openness to AI as a confidant or support mechanism, especially as tools become more context-aware.

Trust, Empathy, and the Human Touch

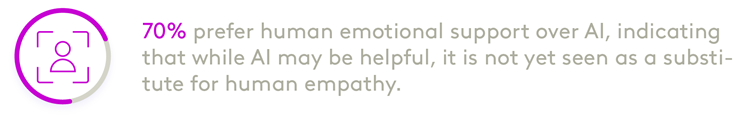

Despite a general openness to AI, emotional authenticity remains a critical differentiator.

A strong majority (70%) still prefer human emotional support over AI. This sentiment is especially pronounced among Boomers (78%), while Gen Z shows the lowest preference for human support at 65%, suggesting generational nuances in comfort with AI companionship.

Additionally, 60% of global consumers agree that AI lacks the empathy needed for human-like support. And while some are willing to engage with AI in specific contexts, many remain cautious about its limitations.

So, while there’s growing interest in AI as a support tool, many consumers still question its emotional depth and prefer human connection when it really matters.

The Role of AI as a Nonjudgmental Companion

Despite these concerns, AI emotional support tools are gaining traction when framed as nonjudgmental, always-available companions. Consumers are not necessarily looking for AI to replace human relationships, but rather to supplement them in moments of solitude, indecision, or emotional overload.

According to the data, 34% of global consumers say they would turn to AI when facing a difficult decision and needing a neutral perspective, highlighting a desire for unbiased input during times of uncertainty. Additionally, 32% would use AI when they need someone to talk to but don’t want to burden others, and 30% say they would rely on AI to vent without fear of judgment.

These findings suggest that while AI may not replace human connection, it is increasingly valued for offering a safe, impartial space for emotional expression.

Privacy and Data Security: Common Concerns

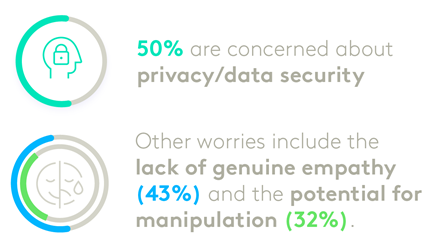

While consumers increasingly turn to AI for its neutrality and nonjudgmental presence, these emotionally intimate interactions raise important questions about trust. Sharing personal thoughts, struggles, or sensitive decisions with a digital tool requires confidence that those details will remain private and protected.

When asked about their concerns with AI emotional support tools, privacy and data security (50%) top the list among global consumers. Other key concerns include AI’s lack of genuine empathy (43%) and the potential for emotional manipulation (32%).

These concerns are especially pronounced among Boomers and in markets such as Australia and South Africa, underscoring the importance of building emotional AI experiences that are not only helpful, but also secure and transparent.

What This Means for Brands

For brands, marketers, and researchers, the takeaway is clear: emotional engagement with AI is on the rise, but it’s not without friction. Brands that want to explore this space must tread carefully, balancing innovation with transparency, and automation with authenticity.

As AI continues to evolve, so too will consumer expectations. Understanding the emotional dimensions of AI engagement will be key to building trusted, human-centric experiences that resonate across generations and cultures.

Get more answers

For more findings from this study, access the complete Connecting with the AI Consumer report. Here you’ll find additional insights into how global consumers are turning to AI, the trade-offs they’re willing to make, and what they expect in the future.

About this study

This research was conducted online among more than 10,000 consumers across ten global markets (Australia, Brazil, China, France, Germany, India, Singapore, South Africa, the UK, and the US) between April 11 and May 2, 2025. All interviews were conducted as online self-completion surveys and collected using controlled quotas evenly distributed across generations and genders within each country. Respondents were sourced from the Kantar Profiles Respondent Hub.