We live in a world where AI achievements make the news daily. From personalised recommendations to predictive assistants, AI-powered experiences are becoming more intuitive and pervasive. But as these technologies grow more sophisticated, so do consumer concerns about how their data is being collected, used, and protected.

At its core, privacy is about giving people control over their personal information and how it is used. When companies use data without clear consent, they risk eroding trust and damaging long-term relationships.

A new report from the Profiles team at Kantar, Connecting with the AI Consumer, reveals how global consumers are navigating the tension between innovation and intrusion. Drawing on the perspectives of more than 10,000 people across ten countries, the report explores how people feel about privacy and data sharing with AI technology, and what brands should consider in order to earn and maintain their trust.

For the purpose of this article, Gen Z is identified as ages 18-27, Millennials ages 28-43, Gen X ages 44-59, and Boomers ages 60+.

What data do global consumers believe AI is collecting?

There’s no shortage of opinions about AI—what it is, what it can do, and how it’s being used. But one thing is clear: AI technology depends on data to function. And often, it’s the kind of data that feels personal.

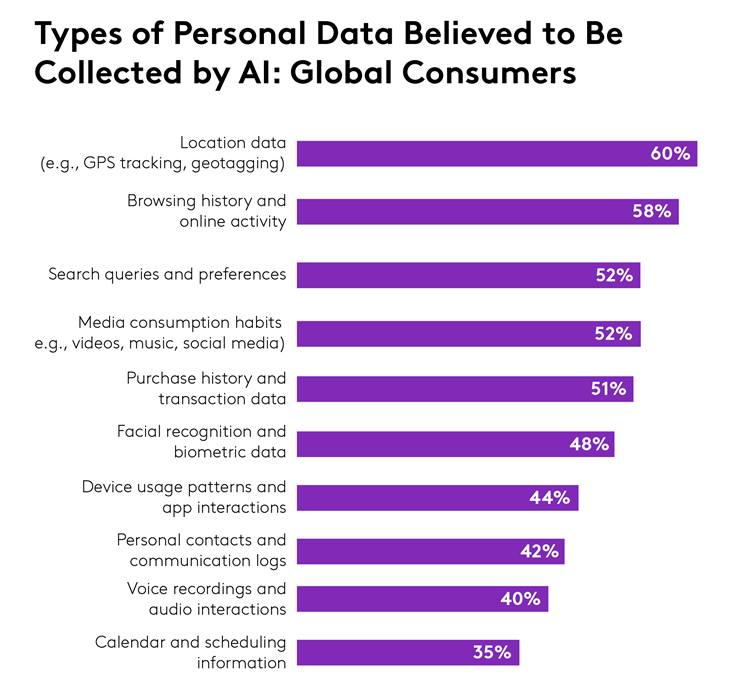

When we asked global consumers what types of personal data they believe AI collects, the top responses were: location data (60%), browsing history (58%), search queries (52%) and media consumption habits (52%). Many also assumed AI collects facial recognition data (48%), voice recordings (40%), and even calendar information (35%).

The perception that AI tools collect highly personal information is widespread, and it varies by country. For instance, consumers in South Africa are more likely than those in other countries to believe that AI collects every type of personal data measured.

Location data is seen as the most commonly collected type across all age groups, especially among Boomers (67%) and Gen X (63%).

But here’s where perception and reality diverge. When we look at the types of data collected by tools like OpenAI’s ChatGPT, the list is much narrower.

OpenAI collects:

- User content (like prompts and uploaded files)

- Log data (such as IP address and browser type)

- Usage data (like what content is viewed)

- Device and location information

- Cookies

What’s not included: facial recognition, voice recordings, or access to calendar data—unless the user specifically connects those tools.

The implication here is that most consumers are already assuming that AI tools are collecting personal data, especially sensitive types like location and browsing behaviour. Whether that’s accurate in all cases or not, perception is reality when it comes to trust. For brands, this suggests AI features must be introduced with transparency front and centre. Clearly communicating what data is being collected, why, and how it's used can help prevent skepticism from turning into avoidance. When people don’t know what’s being collected, they tend to assume the worst.

This concern about data collection ties into a broader unease about AI’s role in society.

AI’s impact on society

While many consumers are intrigued by the convenience AI offers, that curiosity is often tempered by deeper worries about job displacement, misinformation, and a general lack of control.

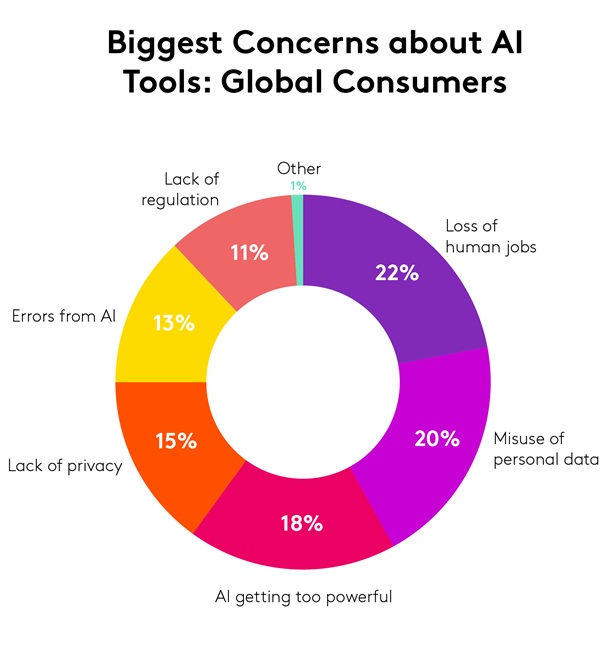

When we asked consumers what their biggest concern is when using AI tools, the top issue globally was the loss of human jobs, cited by 22% of respondents.

This concern was especially high in South Africa (33%), the UK (26%), and France (26%), reflecting anxiety about automation replacing human roles.

The second most common concern was the misuse of personal data at 20% overall, with higher sensitivity in countries like Brazil (24%), Singapore (23%), and China (22%).

Interestingly, fears about AI becoming too powerful were more pronounced in Germany (25%) and India (22%), suggesting deeper concerns about control and oversight.

Global consumer apprehensions about job displacement and data misuse suggest that people want to feel in control when using AI. Brands should consider designing AI tools that enhance autonomy and support decision-making rather than taking over entirely.

Whether through opt-in controls, adjustable settings, or clear explanations of how outputs are generated, empowering users with choice and clarity can help build long-term trust.

AI’s accuracy

Beyond job concerns and data misuse, another key factor shaping perceptions of AI is its accuracy.

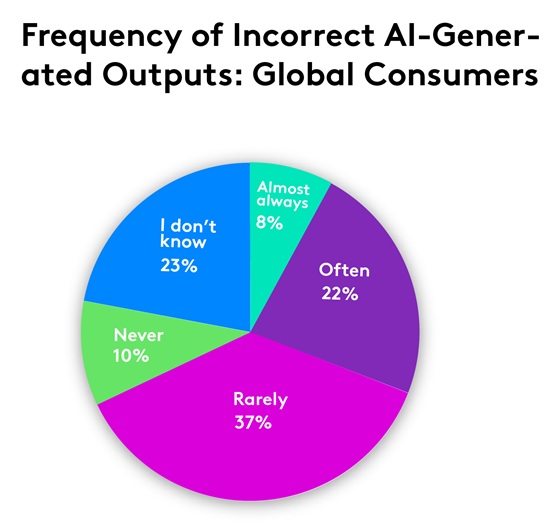

We asked global consumers how often they encounter false or incorrect AI-generated outputs. Surprisingly, nearly half (47%) say they rarely or never do.

This sentiment is especially strong in China (69%), South Africa (60%), and Brazil (55%), where confidence in AI accuracy appears relatively high.

However, a significant portion (30% globally) report encountering errors often or almost always. Errors are reported most frequently in India (41%), Singapore (35%), and Australia (33%), suggesting that users in some regions run into quality or reliability issues more often.

What’s also striking is the level of uncertainty: 23% of respondents say they don’t know how often they encounter incorrect outputs.

The main takeaway here is that while many users are having a positive experience, a sizable group still encounters inaccuracies. Building trust will require not just improving output quality but also helping users better understand and evaluate what AI produces.

What this means for brands

Consumers around the world are engaging with AI more regularly, but they’re also asking harder questions. They want to know what’s being collected, how it’s being used, and what it means for them. Trust is built not only through innovation but also through thoughtful, transparent design.

To create AI-powered experiences that resonate, consider the following actions:

- Communicate clearly about data collection practices

- Empower users with choice and control

- Design for transparency, especially when automation is involved

- Help users assess and understand output accuracy

Get more answers

For more findings from this study, access the complete Connecting with the AI Consumer report. There you’ll find additional insights into how global consumers are turning to AI, the trade-offs they’re willing to make, and what they expect in the future.

About this study

This research was conducted online among more than 10,000 consumers across ten global markets (Australia, Brazil, China, France, Germany, India, Singapore, South Africa, the UK, and the US) between April 11th and May 2nd, 2025. All interviews were conducted as online self-completion surveys, with controlled quotas distributed evenly across generations and genders within each country. Respondents were sourced from the Kantar Profiles Respondent Hub.